前言

大家好,我是明哥!

本片博文是“大数据问题排查系列”之一,讲述某HIVE SQL 作业因为 HIVE 中的元数据与 HDFS中实际的数据不一致引起的一个问题的排查和修复。

以下是正文。

问题现象

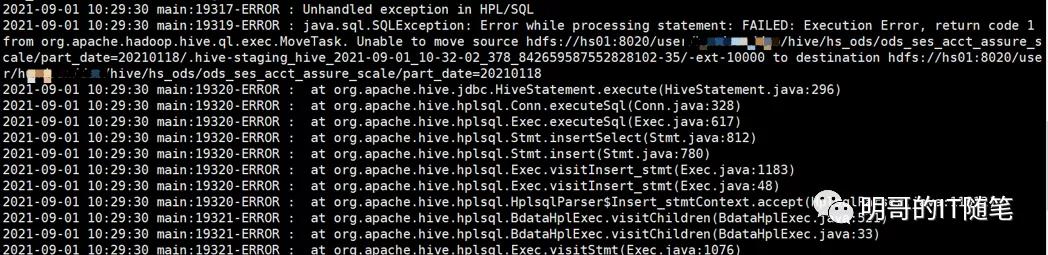

客户端报错如下:

Unable to move source xxx to destination xxx

客户端报错

问题分析

客户端的报错信息,并没有完全展现问题背后的全貌。我们进入 hiveserver2 所在节点查看hiveserver2的日志,可以看到如下相关信息:

2021-09-01 11:47:46,795 INFO org.apache.hadoop.hive.ql.exec.Task: [HiveServer2-Background-Pool: Thread-1105]: Loading data to table hs_ods.ods_ses_acct_assure_scale partition (part_date=20210118) from hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000 2021-09-01 11:47:46,795 INFO hive.metastore: [HiveServer2-Background-Pool: Thread-1105]: HMS client filtering is enabled. 2021-09-01 11:47:46,795 INFO hive.metastore: [HiveServer2-Background-Pool: Thread-1105]: Trying to connect to metastore with URI thrift://hs01:9083 2021-09-01 11:47:46,795 INFO hive.metastore: [HiveServer2-Background-Pool: Thread-1105]: Opened a connection to metastore, current connections: 54 2021-09-01 11:47:46,796 INFO hive.metastore: [HiveServer2-Background-Pool: Thread-1105]: Connected to metastore. 2021-09-01 11:47:46,928 INFO org.apache.hadoop.hive.ql.exec.MoveTask: [HiveServer2-Background-Pool: Thread-1105]: Partition is: {part_date=20210118} 2021-09-01 11:47:46,945 INFO org.apache.hadoop.hive.common.FileUtils: [HiveServer2-Background-Pool: Thread-1105]: Creating directory if it doesn't exist: hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118 2021-09-01 11:47:46,947 ERROR hive.ql.metadata.Hive: [HiveServer2-Background-Pool: Thread-1105]: Failed to move: {} 2021-09-01 11:47:46,947 ERROR org.apache.hadoop.hive.ql.Driver: [HiveServer2-Background-Pool: Thread-1105]: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask. Unable to move source hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000 to destination hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118 2021-09-01 11:47:46,948 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Background-Pool: Thread-1105]: Completed executing command(queryId=hive_20210901114731_d7a78302-fb2a-4b45-9472-db6a9787f710); Time taken: 15.489 seconds 2021-09-01 11:47:46,957 ERROR org.apache.hive.service.cli.operation.Operation: [HiveServer2-Background-Pool: Thread-1105]: Error running hive query: org.apache.hive.service.cli.HiveSQLException: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask. Unable to move source hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000 to destination hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118 at org.apache.hive.service.cli.operation.Operation.toSQLException(Operation.java:329) ~[hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:258) ~[hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hive.service.cli.operation.SQLOperation.access$600(SQLOperation.java:92) ~[hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:345) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_271] at javax.security.auth.Subject.doAs(Subject.java:422) [?:1.8.0_271] at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875) [hadoop-common-3.0.0-cdh6.3.2.jar:?] at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:357) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_271] at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_271] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_271] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_271] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_271] Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to move source hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000 to destination hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118 at org.apache.hadoop.hive.ql.metadata.Hive.getHiveException(Hive.java:3449) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.getHiveException(Hive.java:3405) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.moveFile(Hive.java:3400) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.replaceFiles(Hive.java:3697) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.loadPartitionInternal(Hive.java:1614) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.loadPartition(Hive.java:1525) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.loadPartition(Hive.java:1489) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.exec.MoveTask.execute(MoveTask.java:501) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1334) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:256) ~[hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] ... 11 more Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to move source hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000/000000_0 to destination hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/000000_0 at org.apache.hadoop.hive.ql.metadata.Hive.handlePoolException(Hive.java:3422) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.moveFile(Hive.java:3367) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.replaceFiles(Hive.java:3697) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.loadPartitionInternal(Hive.java:1614) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.loadPartition(Hive.java:1525) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.loadPartition(Hive.java:1489) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.exec.MoveTask.execute(MoveTask.java:501) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1334) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:256) ~[hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] ... 11 more Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to move source hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000/000000_0 to destination hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/000000_0 at org.apache.hadoop.hive.ql.metadata.Hive.getHiveException(Hive.java:3449) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.getHiveException(Hive.java:3405) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive.access$200(Hive.java:175) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive$3.call(Hive.java:3350) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive$3.call(Hive.java:3335) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] ... 4 more Caused by: java.io.IOException: rename for src path: hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/.hive-staging_hive_2021-09-01_11-47-31_216_694180642957006705-35/-ext-10000/000000_0 to dest path:hdfs://hs01:8020/user/hundsun/dap/hive/hs_ods/ods_ses_acct_assure_scale/part_date=20210118/000000_0 returned false at org.apache.hadoop.hive.ql.metadata.Hive$3.call(Hive.java:3347) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] at org.apache.hadoop.hive.ql.metadata.Hive$3.call(Hive.java:3335) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2] ... 4 more 2021-09-01 11:47:46,962 INFO org.apache.hadoop.hive.conf.HiveConf: [HiveServer2-Handler-Pool: Thread-474]: Using the default value passed in for log id: d9b4646b-3c22-4589-8912-a68b92efcca7 2021-09-01 11:47:46,962 INFO org.apache.hadoop.hive.ql.session.SessionState: [HiveServer2-Handler-Pool: Thread-474]: Updating thread name to d9b4646b-3c22-4589-8912-a68b92efcca7 HiveServer2-Handler-Pool: Thread-474 2021-09-01 11:47:46,962 INFO org.apache.hive.service.cli.operation.OperationManager: [d9b4646b-3c22-4589-8912-a68b92efcca7 HiveServer2-Handler-Pool: Thread-474]: Closing operation: OperationHandle [opType=EXECUTE_STATEMENT, getHandleIdentifier()=29e01dfe-8b9b-427a-a610-1d58c526faca] 2021-09-01 11:47:46,973 INFO org.apache.hadoop.hive.conf.HiveConf: [d9b4646b-3c22-4589-8912-a68b92efcca7 HiveServer2-Handler-Pool: Thread-474]: Using the default value passed in for log id: d9b4646b-3c22-4589-8912-a68b92efcca7 2021-09-01 11:47:46,973 INFO org.apache.hadoop.hive.ql.session.SessionState: [d9b4646b-3c22-4589-8912-a68b92efcca7 HiveServer2-Handler-Pool: Thread-474]: Resetting thread name to HiveServer2-Handler-Pool: Thread-474 2021-09-01 11:47:47,025 INFO org.apache.hive.service.cli.thrift.ThriftCLIService: [HiveServer2-Handler-Pool: Thread-474]: Session disconnected without closing properly. 2021-09-01 11:47:47,025 INFO org.apache.hive.service.cli.thrift.ThriftCLIService: [HiveServer2-Handler-Pool: Thread-474]: Closing the session: SessionHandle [d9b4646b-3c22-4589-8912-a68b92efcca7] 2021-09-01 11:47:47,025 INFO org.apache.hive.service.CompositeService: [HiveServer2-Handler-Pool: Thread-474]: Session closed, SessionHandle [d9b4646b-3c22-4589-8912-a68b92efcca7], current sessions:0

从以上信息可以看到,hivesql 使用的是spark执行引擎,且 spark 作业已经执行成功了,但是在hiveser2做收尾工作时,移动临时文件到目标目录下时报错了,最底层的报错信息是 rename for src path xxx to dest path xxx returned false.

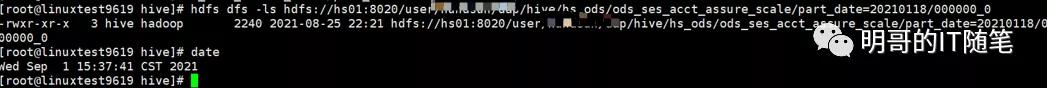

顺藤摸瓜,接下来去 hdfs 上查看问题。通过 hdfs dfs -ls 发现 hdfs上目标文件已经存在了,且通过时间信息可以发现该文件是几天前创建的,跟当前sql作业的执行没有关系:

hdfs-destination-path

问题原因

通过上述排查分析,问题直接原因已经清晰:hive sql 底层的spark作业已经执行成功,对应的数据已经计算完毕,但在移动临时结果文件到最终目标目录时,因为hdfs上最终目标目录已经存在且目标目录下存在同名文件,rename操作无法完成,作业执行失败。

回头看下我们的 sql,其本质就是个对分区表某个分区的 insert overwrite, 照道理来说,应该会覆盖目标分区对应的目录下的数据文件(即先删除旧的数据文件,再创建新数据文件),但为什么这里没有执行删除动作呢?

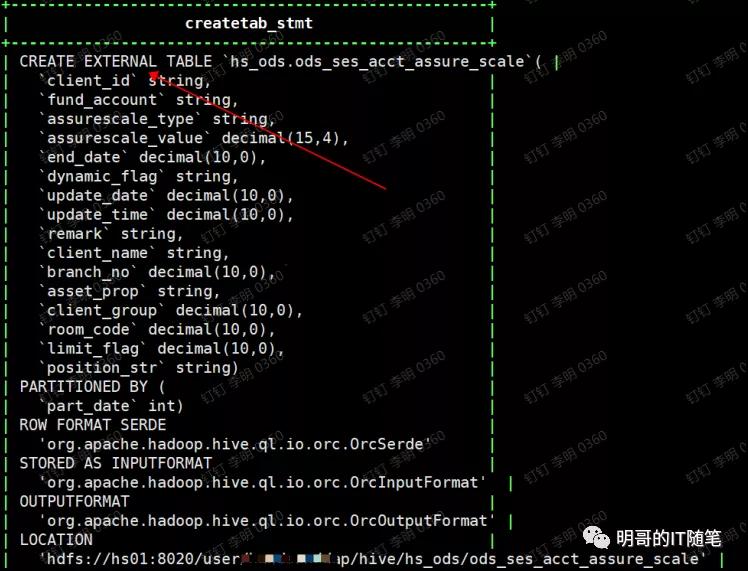

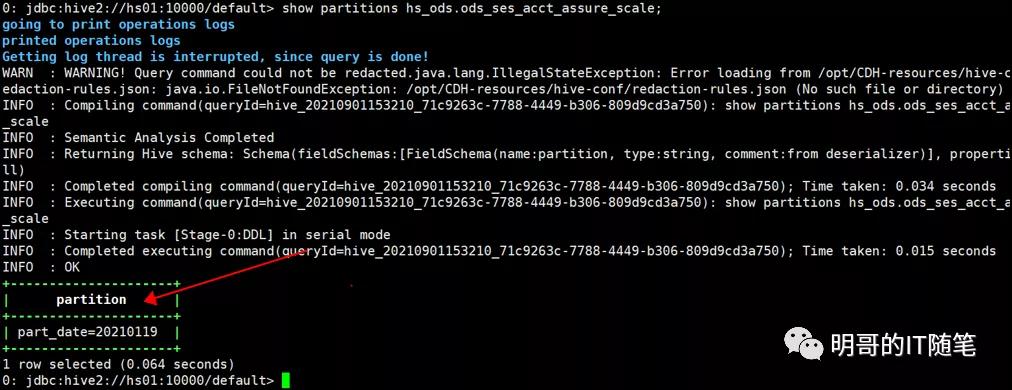

这其实是因为该分区表在 HIVE 中的元数据与 HDFS 中的数据不一致。通过 show create table 和 show partitions 可以发现,在HIVE元数据中该分区表只有一个分区,但HDFS上存在该表其它分区对应的目录和文件:

show create table

show partitions

所以问题的根本原因是:该分区表在 HIVE中的元数据与HDFS上实际的数据不一致,当执行 insert overwrite 操作时,hive 通过存储在 metastore 中的元数据信息发现目标分区并不存在,也就不会尝试去执行hdfs上该分区对应目录的删除操作了,而实际上hdfs上该分区对应的目录和文件都是存在的,所以作业底层的 rename 操作失败了。

问题解决

知道了问题的直接原因和根本原因,解决方法也就顺理成章了:修复 hive 元数据跟hdfs实际的数据一致即可。

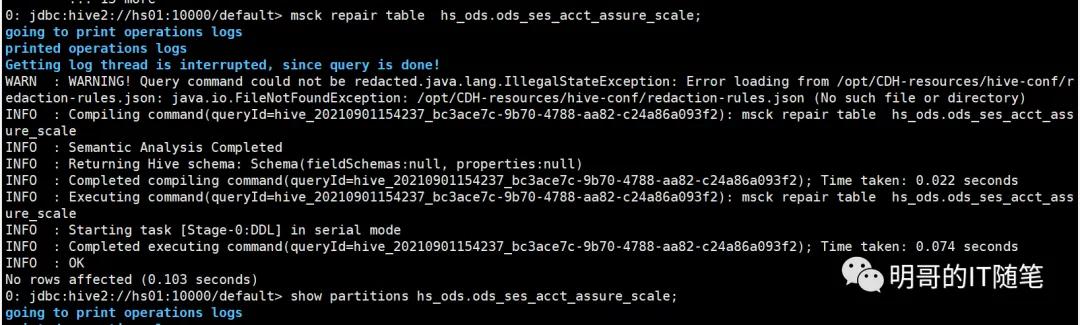

可以使用命令 msck repair table xxx来修复hive表的元数据:

元数据修复完毕,通过show partitions xx 发现,hive中已经可以查到原来遗失的分区。然后重新执行上述 insert overwrite 语句,作业成功执行。

问题总结 当 HIVE 中的元数据与 HDFS 上实际的数据不一致时,一些正常的 HIVE SQL 操作可能会执行失败。 HIVE 中的元数据与 HDFS 上实际的数据不一致的原因有很多,常见的有: 使用了 HIVE 外表,由于外表的特性,在HIVE 中删除外表或外表的某些分区时, HDFS上对应的目录和文件仍会存在,此时就会造成不一致;(我们这里就是这种情况) HIVE 的元数据和底层HDFS的数据是从其他集群同步过来的,但同步过程中有问题,比如时间没对齐状态不一致;(跨集群同步处理不善,会有这种情况) HIVE中的元数据或HDFS上的数据有损坏和丢失。(集群运维管理不规范,会造成这种现象) 可以通过 msck repair table xxx 来修复 hive表的元数据: MSCK [REPAIR] TABLE table_name [ADD/DROP/SYNC PARTITIONS];